Introduction

Welcome to the next blog in our series, where we explore real-world examples of Kafka and Airflow implementations. In this blog, we look at how Kafka and Airflow are used at Twitter, the popular social media platform. By using Kafka for real-time data ingestion and streaming and Airflow for workflow management, Twitter efficiently captures and analyzes a massive volume of tweets, enabling it to deliver a real-time and engaging user experience.

Overview of Twitter’s Data Pipeline

Twitter’s data pipeline is a critical component of its platform, capturing and processing a vast amount of user-generated data. Real-time data ingestion and streaming are fundamental to the pipeline architecture. Twitter continuously ingests tweets, user interactions, and platform events to gain insights into user behavior, trending topics, and real-time conversations. Efficient data processing and analysis allow Twitter to provide timely recommendations, surface personalized content, and deliver an engaging user experience.

Kafka Implementation at Twitter

Kafka plays a pivotal role in Twitter’s data pipeline by enabling real-time data ingestion and streaming. Twitter uses Kafka to ingest and process the massive volume of tweets and user interactions generated on its platform. Kafka’s distributed architecture provides scalability and fault tolerance, allowing Twitter to handle high data throughput. Twitter leverages Kafka Streams for real-time analysis and processing of tweet streams, enabling it to identify trending topics, detect anomalies, and extract valuable insights. Kafka’s integration with other data processing frameworks and machine learning platforms lets Twitter perform advanced analytics and make data-driven decisions.
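
To make the idea of identifying trending topics from a tweet stream more concrete, here is a minimal sketch in plain Python. It is not Kafka Streams code and not Twitter's actual topology. It only imitates a windowed aggregation by grouping events into fixed 60-second windows and counting hashtags in each one. The window size, the function names (`window_start`, `count_hashtags`, `trending`), and the sample events are all assumptions made for illustration.

```python
from collections import Counter, defaultdict

WINDOW_SECONDS = 60  # assumed window size, chosen only for illustration


def window_start(timestamp, size=WINDOW_SECONDS):
    """Return the start of the fixed-size window that contains timestamp."""
    return timestamp - (timestamp % size)


def count_hashtags(events, size=WINDOW_SECONDS):
    """Group (timestamp, text) events into windows and count hashtags per window."""
    windows = defaultdict(Counter)
    for timestamp, text in events:
        # Treat any whitespace-separated token starting with '#' as a hashtag.
        tags = [w.lower() for w in text.split() if w.startswith("#") and len(w) > 1]
        windows[window_start(timestamp, size)].update(tags)
    return dict(windows)


def trending(windows, window, top_n=2):
    """Return the top_n most frequent hashtags in the given window."""
    return [tag for tag, _ in windows.get(window, Counter()).most_common(top_n)]


# Hypothetical sample events: (seconds since start, tweet text).
events = [
    (0, "Loving #kafka today"),
    (10, "#Kafka and #airflow together"),
    (59, "more #kafka"),
    (61, "#airflow DAGs"),
]
windows = count_hashtags(events)
top_first_window = trending(windows, 0, top_n=1)
```

In a real deployment the events would arrive from a Kafka topic rather than a list, and the windowing and state handling would be done by the stream processing framework.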

Airflow Implementation at Twitter

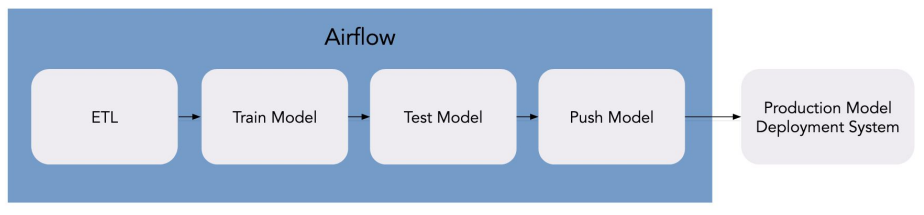

Airflow serves as a vital tool in managing and scheduling data workflows at Twitter. Twitter utilizes Airflow to orchestrate its data processing tasks and ensure efficient data pipeline operations. Airflow’s DAG-based approach enables Twitter to define complex dependencies between tasks and schedule their execution.
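
The DAG-based approach can be sketched with the Python standard library alone. The example below is a toy stand-in for what a scheduler does with task dependencies, not Airflow's API. The task names and the `execution_waves` helper are hypothetical. In Airflow itself, the same dependencies would be declared between operators inside a DAG, typically with the `>>` operator.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "clean_tweets": {"extract_tweets"},
    "sentiment_analysis": {"clean_tweets"},
    "build_recommendations": {"clean_tweets"},
    "publish_report": {"sentiment_analysis", "build_recommendations"},
}


def execution_waves(deps):
    """Return lists of tasks that could run in parallel, in dependency order."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks whose dependencies are done
        waves.append(ready)
        sorter.done(*ready)  # mark them finished so dependents become ready
    return waves


waves = execution_waves(dependencies)
for number, tasks in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(tasks)}")
```

Each wave contains tasks with no unmet dependencies, which is why `sentiment_analysis` and `build_recommendations` land in the same wave and could run side by side.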

Typical ML pipelines built with Airflow are structured this way.

source: https://airflowsummit.org/slides/b1-Twitter-Dan.pdf

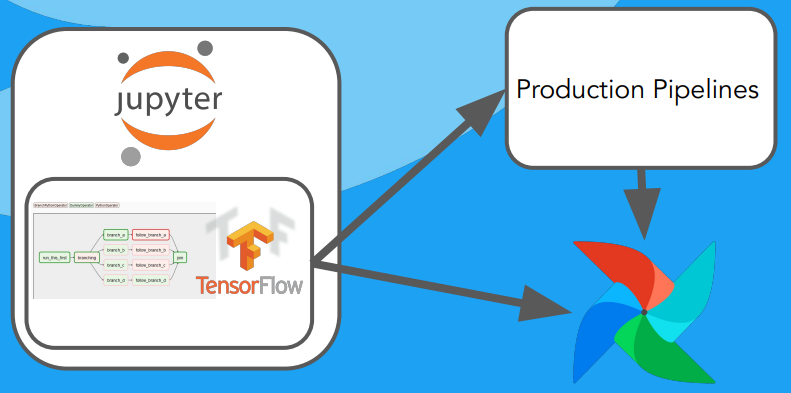

At Twitter, however, Airflow is used together with TensorFlow to manage AI/ML/LLM pipelines.

source: https://airflowsummit.org/slides/b1-Twitter-Dan.pdf

With Airflow, Twitter schedules tasks such as data cleaning, sentiment analysis, and recommendation algorithms. Airflow’s extensibility allows Twitter to integrate custom operators and sensors to meet its specific data processing needs.
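
A sensor is essentially a task that waits for a condition before letting downstream work proceed. In Airflow, a custom sensor subclasses `BaseSensorOperator` and implements `poke(context)`, which is called repeatedly until it returns `True` or a timeout is reached. The sketch below mimics that polling loop in plain Python so it runs without Airflow installed. The classes `PollingSensor`, `BatchReadySensor`, and `SensorTimeout` are hypothetical names for illustration, not part of Airflow.

```python
import time


class SensorTimeout(Exception):
    """Raised when the condition is not met before the timeout."""


class PollingSensor:
    """Toy polling loop, loosely modelled on how a sensor task behaves."""

    def __init__(self, poke_interval=0.01, timeout=1.0):
        self.poke_interval = poke_interval  # seconds to wait between checks
        self.timeout = timeout              # give up after this many seconds

    def poke(self):
        raise NotImplementedError

    def run(self):
        """Call poke() until it returns True; return the number of attempts."""
        deadline = time.monotonic() + self.timeout
        attempts = 0
        while True:
            attempts += 1
            if self.poke():
                return attempts
            if time.monotonic() >= deadline:
                raise SensorTimeout(f"condition not met after {attempts} attempts")
            time.sleep(self.poke_interval)


class BatchReadySensor(PollingSensor):
    """Hypothetical sensor that waits until a data batch is reported ready."""

    def __init__(self, is_ready, **kwargs):
        super().__init__(**kwargs)
        self.is_ready = is_ready  # callable returning True once data has landed

    def poke(self):
        return bool(self.is_ready())


# Simulated source that becomes ready on the third check.
arrivals = iter([False, False, True])
attempts = BatchReadySensor(is_ready=lambda: next(arrivals), poke_interval=0.001).run()

# A source that never becomes ready triggers the timeout path.
try:
    BatchReadySensor(is_ready=lambda: False, poke_interval=0.001, timeout=0.01).run()
    timed_out = False
except SensorTimeout:
    timed_out = True
```

The same shape applies to a custom operator, except that an operator implements a single `execute(context)` call instead of a repeated check.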

Airflow’s intuitive user interface and monitoring capabilities provide Twitter’s teams with visibility into workflow progress, task performance, and issue resolution, ensuring the smooth execution of their data pipeline.

How It Works

- User Log-In:
  - Users access the Twitter platform and log in using their credentials.
  - The login request is authenticated and validated by the Twitter authentication system.

- Kafka Data Ingestion:
  - Kafka is responsible for ingesting and capturing real-time data, including user interactions and tweet events.
  - User login events are produced as messages in Kafka for further processing.

- Workflow Management with Airflow:
  - Airflow manages the workflow of processing user login events and related tasks.
  - It schedules and triggers the necessary tasks and data processing steps based on predefined workflows and dependencies.

- User Interaction Analysis:
  - Within the workflow triggered by Airflow, user interaction events, such as tweet likes, retweets, and follows, are processed.
  - These events are consumed from Kafka and analyzed to generate user activity metrics, trends, and recommendations.

- Sending Tweets:
  - Users compose and send tweets through the Twitter platform.
  - The tweet is sent to the Twitter server infrastructure for processing.

- Kafka Tweet Streaming:
  - The tweet sent by the user is captured as an event and produced as a message in Kafka for real-time processing and analysis.
  - Kafka streams consume the tweet events, enabling real-time processing of user-generated content.

- Browse and Scroll Tweet Recommendations:
  - Twitter generates tweet recommendations for users based on their interests, followers, and previous interactions.
  - Airflow triggers workflows that fetch and process tweet recommendations for each user.
  - The recommendations are produced as messages in Kafka and consumed by the Twitter platform to populate the user’s timeline.

- Streaming and Real-time Updates:
  - Kafka streams continuously consume tweet events and process them within Twitter’s data infrastructure.
  - Real-time updates, such as new tweets from followed accounts or recommended tweets, are pushed to the user’s timeline for immediate display.

- Notifications:
  - Twitter sends various notifications to users, including mentions, replies, direct messages, and account activity updates.
  - Notification events are produced as messages in Kafka based on specific triggers or events.
  - Kafka consumers process the notification events and deliver them to the user’s device through push notifications or in-app notifications.
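
The produce and consume steps above can be sketched with a small in-memory model of a topic. This is an illustration of the concepts only, not a Kafka client and not Twitter's implementation. The `InMemoryTopic` class, the topic name, the group names, and the event shapes are all hypothetical. The sketch uses `zlib.crc32` to pick a partition for simplicity, which is an assumption: Kafka's own partitioner uses a different hash. What carries over is the behaviour: records with the same key land in the same partition and keep their order, and each consumer group tracks its own position in the log.

```python
import zlib
from collections import defaultdict


class InMemoryTopic:
    """Toy model of a partitioned, append-only topic with per-group offsets."""

    def __init__(self, name, num_partitions=3):
        self.name = name
        self.partitions = [[] for _ in range(num_partitions)]
        # Each consumer group gets its own list of read positions.
        self.offsets = defaultdict(lambda: [0] * num_partitions)

    def produce(self, key, value):
        """Append a record to the partition chosen by hashing the key."""
        partition = zlib.crc32(key.encode("utf-8")) % len(self.partitions)
        self.partitions[partition].append((key, value))
        return partition

    def consume(self, group):
        """Return records the group has not seen yet and advance its offsets."""
        records = []
        positions = self.offsets[group]
        for index, log in enumerate(self.partitions):
            records.extend(log[positions[index]:])
            positions[index] = len(log)
        return records


events = InMemoryTopic("user-events")
events.produce("alice", {"type": "login"})
events.produce("alice", {"type": "tweet", "text": "hello"})
events.produce("bob", {"type": "like", "tweet_author": "alice"})

# Two independent groups read the same log without affecting each other.
analytics_batch = events.consume("analytics")
notification_batch = events.consume("notifications")

# Events for one key come back in the order they were produced.
alice_events = [value["type"] for key, value in analytics_batch if key == "alice"]

# A second read by the same group returns nothing new.
second_read = events.consume("analytics")
```

Because the analytics and notification groups keep separate offsets, a slow notification consumer would not hold back the analytics workflow, which is one reason a shared log fits the flow described above.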

Benefits and Impact at Twitter

The implementation of Kafka and Airflow brings substantial benefits to Twitter’s data pipeline. Kafka’s data ingestion and streaming capabilities enable Twitter to capture and process a massive volume of tweets and user interactions in real time. This empowers Twitter to deliver trending topics, personalized content, and real-time recommendations to its users.

Kafka’s fault tolerance ensures data reliability even during peak usage periods. Airflow’s workflow management capabilities provide Twitter with efficient task scheduling, enabling timely data processing and analysis. Airflow’s monitoring features allow Twitter’s teams to track workflow progress and performance, and quickly identify and resolve any issues, ensuring a robust and responsive data pipeline.

Conclusion

In this blog, we explored how Twitter incorporates Kafka and Airflow in its data pipeline. By leveraging Kafka for real-time data ingestion and streaming and Airflow for workflow management, Twitter efficiently captures and analyzes a massive volume of tweets, delivering a real-time and engaging user experience. If you’re interested in implementing these technologies in your own data pipelines, contact Anant Corporation to leverage our expertise and customized solutions. Stay tuned for the next blog in our series, where we will delve into real-world examples of Kafka and Airflow implementation at Slack.

Anant’s Role and Expertise

As an experienced provider of Real-Time Data Enterprise Platforms, Anant Corporation plays a crucial role in helping enterprises incorporate Kafka and Airflow into their custom data pipelines. Our extensive expertise in data pipeline processes and our Data Lifecycle Management Toolkit (DLM) simplify and accelerate the implementation of these technologies. Anant’s expert guidance, customization, troubleshooting, and support services ensure seamless integration, optimized performance, and continuous improvement of data platforms. Contact Anant to leverage our expertise and achieve success with your data pipeline projects.