By Lili Kazemi

For a long time, AI regulation lived in the future tense.

It’s coming.

We’ll deal with it later.

Let’s see how this shakes out.

That posture made sense when generative AI still felt experimental—a tool you tested quietly, often without telling legal, compliance, or procurement what was happening under the hood.

That window is closing.

Not because regulators suddenly woke up—but because AI itself stopped behaving like an experiment. It became infrastructure. And infrastructure always gets governed.

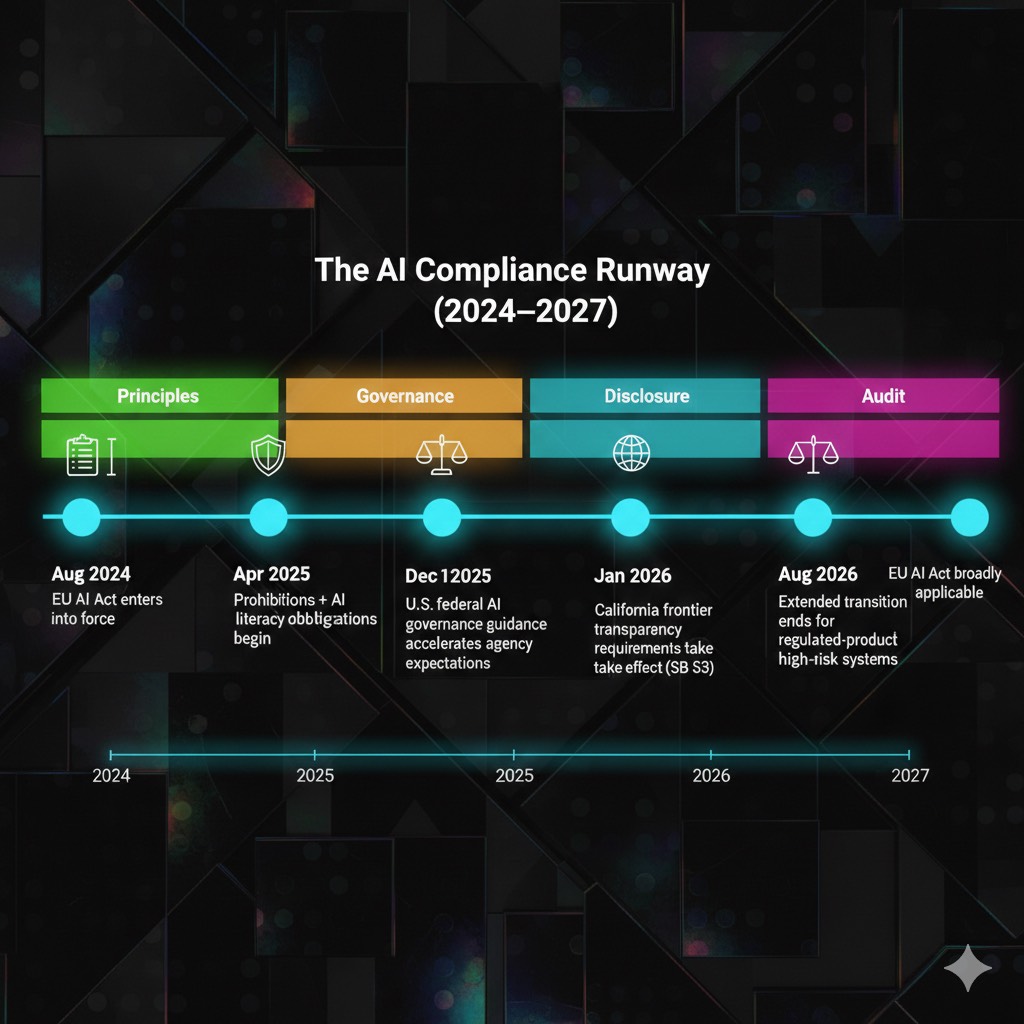

What most companies still miss is that AI compliance doesn’t arrive as a single moment.

It arrives as a timeline—gradual, layered, and unforgiving if you ignore the early markers.

The Quiet Shift From Principles to Proof

If you zoom out, the last few years were all about principles.

Ethical AI.

Responsible use.

Trustworthy systems.

Important ideas. Necessary framing. Also largely unenforceable.

What’s happening now is different.

Across jurisdictions, regulators are no longer asking what you believe about AI. They’re asking what you can document, defend, and monitor.

The new compliance reality isn’t a philosophy test. It’s an evidence test.

That shift—from values to proof—is the real story of AI regulation.

And it’s already underway.

Why AI Regulation Feels Confusing on Purpose

One reason companies underestimate what’s coming is that nothing seems to “flip” overnight.

There’s no single launch date.

No dramatic enforcement announcement.

No universal rulebook.

Instead, obligations phase in quietly:

• Some apply only to certain models

• Others depend on how AI is used, not what it is

• Many don’t bite until a year or two after the rules are adopted

That ambiguity isn’t accidental. It’s how regulators give organizations time to adapt, and how they later justify enforcement against those that don’t.

AI compliance is not a cliff.

It’s a runway.

But runways still have an end.

The Timeline No One Is Paying Attention To

In the United States, the compliance clock isn’t being set by a single “federal AI law.” It’s being set by federal authorities using existing powers—and by governance mandates that quietly become the standard for “reasonable” practice.

Here are the federal signals most companies should stop ignoring:

- The Office of Management and Budget is operationalizing federal AI governance through OMB Memorandum M‑25‑21—the kind of document that looks internal until you realize it shapes procurement expectations and vendor requirements.

- The National Institute of Standards and Technology is standardizing, through the NIST AI Risk Management Framework (AI RMF), the risk language many organizations will be judged against.

- The Federal Trade Commission is reinforcing that there is no “AI exemption” from consumer protection law, and it’s tracking AI-related deception and unfair practices through its Artificial Intelligence hub.

- The Consumer Financial Protection Bureau has clarified how lenders must provide specific reasons in adverse action notices even when using AI and complex models—see CFPB guidance on credit denials using AI.

- The Equal Employment Opportunity Commission has made its posture increasingly explicit on discrimination risk from AI-enabled employment decision tools—see “What is the EEOC’s role in AI?” (PDF).

- The Department of Justice Civil Rights Division is building public resources at the intersection of AI and civil rights—see Artificial Intelligence and Civil Rights.

None of this feels like one big federal AI law. And that’s exactly why it’s easy to underestimate. It arrives as governance mandates, enforcement posture, and procurement standards—until one day it feels like the ground rules have changed.

Because they have.

California’s Signal: Name the Law, Don’t Hand-Wave It

Then there’s California, where the frontier-model debate didn’t disappear—it narrowed into something more targeted and harder to dismiss.

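California Senate Bill 53 (SB 53), the Transparency in Frontier Artificial Intelligence Act, puts transparency and incident discipline into law for in-scope frontier developers: it requires covered developers to publish a safety framework and report critical safety incidents to the state.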

The important takeaway isn’t whether your organization hits the frontier threshold today. It’s what this signals:

When systems are powerful enough, “trust us” stops working.

You need a framework you can publish—and behavior you can defend.

The EU’s Date That Should Be on Your Calendar

If your products, customers, or partners touch Europe, you also have a clear external deadline to prepare for.

The European Commission’s official EU AI Act timeline is a reminder that compliance isn’t hypothetical. It’s staged, and the Act’s “broadly applicable” moment, August 2, 2026, is a real operational milestone.

Even for U.S.-based teams, this matters because EU readiness tends to become the procurement baseline that enterprise buyers expect everywhere else.

Standards Are Becoming the Real Rulebook

At the same time, something else is happening beneath the headlines:

Voluntary standards are quietly becoming mandatory in practice.

Frameworks like the NIST AI RMF aren’t laws. But they’re becoming:

• procurement requirements

• board expectations

• audit reference points

• liability anchors

Because they solve a basic problem: they help an organization prove it has control.

And in this new era, control isn’t a nice-to-have. It’s the price of scale.

The Real Risk Isn’t Over-Regulation. It’s Delay.

The biggest compliance failures rarely come from defiance. They come from postponement.

AI governance is often treated as something you build after product-market fit, after scale, after revenue.

But regulation doesn’t wait for internal readiness. It measures whether you acted when the signals were clear.

And the signals are clear now.

The companies that will struggle most in the next phase aren’t the reckless ones. They’re the well-intentioned ones who assumed they had more time.

The Human Edge

AI compliance isn’t about fear.

It’s about foresight.

The human edge of AI is knowing when experimentation ends and responsibility begins—and building governance before someone else builds it for you.

Because AI has a clock now.

And it’s already ticking.


Lili Kazemi is General Counsel and AI Policy Leader at Anant Corporation, where she advises on the intersection of global law, tax, and emerging technology. She brings over 20 years of combined experience from leading roles in Big Law and Big Four firms, with a deep background in international tax, regulatory strategy, and cross-border legal frameworks. Lili is also the founder of DAOFitLife, a wellness and performance platform for high-achieving professionals navigating demanding careers.

Follow Lili on LinkedIn and X

🔍 Discover What We’re All About

At Anant, we help forward-thinking teams unlock the power of AI—safely, strategically, and at scale.

From legal to finance, our experts guide you in building workflows that act, automate, and aggregate—without losing the human edge.

Let’s turn emerging tech into your next competitive advantage.

Follow us on LinkedIn

👇 Subscribe to our weekly newsletter, the Human Edge of AI, to see AI through a legal, policy, and human lens.

Subscribe on LinkedIn